As AI agents become more autonomous and take on increasingly complex tasks, adaptive learning becomes a core requirement. Adaptive learning is the ability to update behaviour based on new information. While model fine-tuning used to be the dominant mechanism for adaptation, modern agent architectures allow for more flexible and lightweight options that work in real time and without training a new model after every interaction.

Recently I have come across three practical ways to structure adaptive learning in agents. These methods can be used independently or combined to yield highly personalised, continuously improving systems.

The goal is to make AI interactions better: either more personalised for users or, in agentic workflows, more likely to produce successful outputs.

1. Memory-Driven Adaptation (Static or Episodic Memory Stores)

This approach gives agents a memory module: a structured store of user preferences, constraints, facts, and past decisions. During inference, the agent retrieves relevant entries from memory and conditions its reasoning on them.

This is often implemented through vector databases, key-value stores, or graph-based memory.

How It Works

- The agent observes some new information (e.g., user behaviour, task success/failure).

- It stores distilled knowledge in memory, typically as:

  - Episodic memory: “What happened”

  - Semantic memory: “Generalised rules or preferences”

- When acting, the agent retrieves related memories and uses them to guide its next actions.
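A minimal sketch of this store-and-retrieve loop, assuming an in-process list in place of a real vector database and a bag-of-words cosine similarity in place of learned embeddings. `MemoryEntry`, `MemoryStore` and `build_prompt` are hypothetical names used only for illustration.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass, field


@dataclass
class MemoryEntry:
    kind: str  # "episodic" (what happened) or "semantic" (generalised rule/preference)
    text: str


def _vector(text: str) -> Counter:
    # Bag-of-words term counts: a crude stand-in for a learned embedding.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


@dataclass
class MemoryStore:
    entries: list = field(default_factory=list)

    def add(self, kind: str, text: str) -> None:
        self.entries.append(MemoryEntry(kind, text))

    def retrieve(self, query: str, k: int = 3) -> list:
        # Rank entries by similarity to the query and keep the top k non-zero matches.
        q = _vector(query)
        scored = [(_cosine(q, _vector(e.text)), e) for e in self.entries]
        scored = [pair for pair in scored if pair[0] > 0]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [e for _, e in scored[:k]]


def build_prompt(store: MemoryStore, task: str) -> str:
    # Condition the agent on retrieved memories by placing them ahead of the task.
    lines = [f"- [{m.kind}] {m.text}" for m in store.retrieve(task)]
    return "Relevant memory:\n" + "\n".join(lines) + f"\n\nTask: {task}"
```

In a production system the similarity function and list would be replaced by an embedding model and a proper store; the shape of the loop (write distilled entries, retrieve by relevance, prepend to the prompt) stays the same.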

Strengths

- No model retraining required

- Supports long-term personalisation

- Transparent and inspectable

Challenges

- Memory can grow without bound unless it is periodically summarised

- Needs a reliable retrieval mechanism

- If not carefully structured, the agent can over-generalise from isolated events
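One way to address unbounded growth and over-generalisation together is to cap the episodic buffer and promote a lesson to semantic memory only once it recurs. The sketch below assumes each episode has already been distilled into a short lesson string (for example by an LLM summariser, not shown); `ConsolidatingMemory`, `max_episodes` and `min_support` are hypothetical names and illustrative thresholds, not recommended values.

```python
from collections import Counter, deque


class ConsolidatingMemory:
    """Bounded episodic memory that promotes recurring lessons to semantic rules."""

    def __init__(self, max_episodes: int = 100, min_support: int = 3):
        # Oldest episodes are discarded automatically once the buffer is full.
        self.episodes = deque(maxlen=max_episodes)
        self.min_support = min_support
        self.rules = set()  # semantic memory: survives episode eviction

    def record(self, event: str, lesson: str) -> None:
        self.episodes.append((event, lesson))
        self._consolidate()

    def _consolidate(self) -> None:
        counts = Counter(lesson for _, lesson in self.episodes)
        for lesson, n in counts.items():
            # Generalise only when a lesson recurs, not from a single isolated event.
            if n >= self.min_support:
                self.rules.add(lesson)
```

Promoted rules persist after the episodes that produced them are evicted, which is what keeps the store bounded without losing what was learned.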

Best Use Cases

- Personalisation

- Multi-session assistants

- Domain agents that accumulate knowledge over time (research, sales, support)

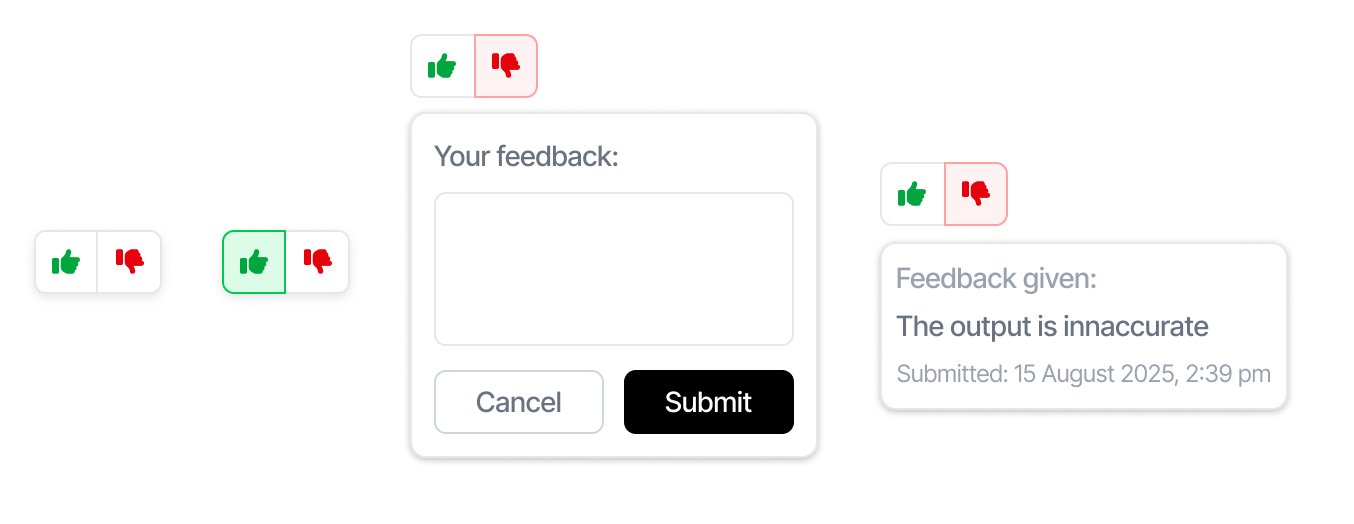

2. Feedback-Conditioned Adaptation (Using Thumbs-Up/Down + Comments)

User feedback is one of the most powerful adaptation signals available to agent designers. It provides a direct, supervised signal about which decisions were good and which were not. Unlike implicit signals (e.g., click-through rates), explicit feedback gives clear, immediate corrections.

This method structures an adaptive learning loop around positive/negative reinforcement plus optional free-text comments.

How It Works

- After the agent produces an action or decision, the user can:

  - Give a thumbs-up (positive feedback)

  - Give a thumbs-down (negative feedback)

  - Add a textual comment describing what was good or bad

- The system logs:

  - Context (inputs)

  - Agent reasoning trace (if permitted)

  - Output/action

  - Feedback signal

- When the agent faces a similar decision again, it:

  - Retrieves prior examples with feedback

  - Uses the reinforced patterns to improve its behaviour
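A sketch of the logging schema and the retrieval step, using hypothetical `FeedbackEntry` and `FeedbackLog` classes. Word-set (Jaccard) overlap stands in for the embedding similarity a vector database would provide, and retrieved entries are turned into "Repeat"/"Avoid" lines that could be placed in the next prompt.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class FeedbackEntry:
    context: str                 # inputs the agent saw
    output: str                  # what the agent produced or did
    rating: int                  # +1 for thumbs-up, -1 for thumbs-down
    comment: Optional[str] = None
    trace: Optional[str] = None  # reasoning trace, stored only if permitted


class FeedbackLog:
    def __init__(self):
        self.entries = []

    def log(self, entry: FeedbackEntry) -> None:
        self.entries.append(entry)

    def similar(self, context: str, k: int = 3) -> list:
        words = set(context.lower().split())

        def score(e: FeedbackEntry) -> float:
            # Jaccard overlap of word sets: a crude stand-in for embedding similarity.
            other = set(e.context.lower().split())
            union = words | other
            return len(words & other) / len(union) if union else 0.0

        ranked = sorted(self.entries, key=score, reverse=True)
        return [e for e in ranked if score(e) > 0][:k]

    def guidance(self, context: str) -> str:
        # Turn similar past feedback into do/avoid lines for the next prompt.
        lines = []
        for e in self.similar(context):
            label = "Repeat" if e.rating > 0 else "Avoid"
            note = f" ({e.comment})" if e.comment else ""
            lines.append(f"{label}: {e.output}{note}")
        return "\n".join(lines)
```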

This creates a localised form of reinforcement learning without any model training: the agent’s prompts, heuristics, memory records, or tool-selection policy are adapted based on historical feedback.

Strengths

- Human-aligned: real users shape agent behaviour

- Fast iteration: improvements can emerge in minutes, not training cycles

- Highly interpretable: feedback entries can be inspected or aggregated

Challenges

- Requires a structured feedback schema

- Agents need retrieval logic to match feedback to new situations

- Risk of misinterpreting sparse or biased feedback
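To reduce the risk of over-reacting to sparse feedback, one option (a technique swapped in here, not taken from the text above) is to smooth approval rates with a Beta prior and require a minimum number of votes before changing behaviour. The prior of 2 up / 2 down, the 0.4 threshold and the 5-vote minimum are arbitrary assumptions. Smoothing addresses sparsity only; biased feedback would need separate handling.

```python
def smoothed_approval(ups: int, downs: int, prior_up: float = 2.0, prior_down: float = 2.0) -> float:
    """Posterior mean approval under a Beta(prior_up, prior_down) prior."""
    return (ups + prior_up) / (ups + downs + prior_up + prior_down)


def should_change_behaviour(ups: int, downs: int, threshold: float = 0.4, min_votes: int = 5) -> bool:
    # Act only when there is enough evidence AND the smoothed approval is low.
    return ups + downs >= min_votes and smoothed_approval(ups, downs) < threshold
```

With these settings a single thumbs-down on a new behaviour gives a smoothed approval of 0.4 but triggers no change, because one vote is below the minimum.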

Best Use Cases

- Agents making subjective decisions (writing, summarisation, UX)

- Workflow automation systems where correctness matters

- Domain agents that improve through critique (coding assistants, tutoring agents)

Implementation Patterns

- Store feedback entries in a vector DB with metadata

- Include a retrieval step in the agent planning loop: “Before deciding, fetch any feedback relevant to this task or domain.”

- Use comments to generate explicit rules that become part of the agent’s policy
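The retrieval step and the comment-to-rule pattern might look like this inside a planning loop. `AgentPolicy`, `add_rule_from_comment` and `plan` are hypothetical; the fixed "Do not:"/"Keep doing:" template stands in for an LLM that would normally rewrite comments into rules, and the `domain` key plays the role of the metadata filter a vector DB would apply.

```python
from dataclasses import dataclass, field


@dataclass
class AgentPolicy:
    rules: dict = field(default_factory=dict)  # domain -> list of rule strings

    def add_rule_from_comment(self, domain: str, rating: int, comment: str) -> None:
        # Hypothetical template; a real system might ask an LLM to rephrase the comment.
        prefix = "Keep doing" if rating > 0 else "Do not"
        rule = f"{prefix}: {comment.strip().rstrip('.')}"
        bucket = self.rules.setdefault(domain, [])
        if rule not in bucket:  # skip duplicates from repeated comments
            bucket.append(rule)

    def rules_for(self, domain: str) -> list:
        return self.rules.get(domain, [])


def plan(policy: AgentPolicy, domain: str, task: str) -> str:
    # "Before deciding, fetch any feedback relevant to this task or domain."
    rules = policy.rules_for(domain)
    header = "\n".join(rules) if rules else "(no learned rules yet)"
    return f"Learned rules for {domain}:\n{header}\n\nTask: {task}"
```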

3. Policy-Gradient or Rule-Learning Adaptation (Lightweight On-Policy Updates)

Some agentic systems implement a form of micro-learning where the agent updates its internal “policy”—a set of decision-making heuristics—based on observed outcomes.

This is not full reinforcement learning but rather incremental policy updates, often stored as rules, scoring functions, or updated planning heuristics.

How It Works

- The agent attempts tasks and records:

  - Actions taken

  - Intermediate states

  - Success/failure metrics

- A policy engine evaluates these traces and updates:

  - Tool selection priorities

  - Planning templates

  - Internal routing logic

- Future decisions follow the updated policy until new evidence suggests refinement.
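A minimal policy engine for the tool-selection case, assuming each trace is reduced to a task type, the tool used and a success flag. The exponential-moving-average update and its learning rate are illustrative choices; `Trace` and `PolicyEngine` are hypothetical names.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Trace:
    task_type: str
    tool: str
    success: bool


class PolicyEngine:
    """Keeps a per-(task_type, tool) success score and routes to the best tool."""

    def __init__(self, tools, learning_rate: float = 0.2, initial_score: float = 0.5):
        self.tools = list(tools)
        self.lr = learning_rate
        self.scores = defaultdict(lambda: initial_score)

    def update(self, trace: Trace) -> None:
        key = (trace.task_type, trace.tool)
        target = 1.0 if trace.success else 0.0
        current = self.scores[key]
        # Move the score a fraction of the way toward the observed outcome.
        self.scores[key] = current + self.lr * (target - current)

    def choose(self, task_type: str) -> str:
        # Highest score wins; ties go to the tool listed first.
        return max(self.tools, key=lambda t: self.scores[(task_type, t)])
```

After three failed web searches and three successful SQL queries on the same task type, the SQL tool's score rises from 0.5 to 0.744 and routing switches to it, while unrelated task types keep the original ordering.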

Strengths

- Enables continual improvement without retraining

- Works well for multi-step autonomous agents

- Often more stable and easier to audit than traditional RL, because updates are discrete and interpretable

Challenges

- Requires explicit success metrics

- Needs guardrails to avoid drifting into suboptimal policies

- Less applicable outside procedural or tool-driven systems
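The guardrails can be made concrete. This sketch shows three simple ones under assumed thresholds: a cap on how far a single outcome can move a score, a floor so no option is permanently ruled out, and a minimum number of trials before a learned preference can override a default. `GuardedRouter` and its parameters are hypothetical.

```python
from collections import defaultdict


class GuardedRouter:
    """Routing policy with simple guardrails against drift (illustrative thresholds)."""

    def __init__(self, default_tool: str, tools, min_trials: int = 5,
                 max_step: float = 0.1, floor: float = 0.05):
        self.default_tool = default_tool
        self.tools = list(tools)
        self.min_trials = min_trials
        self.max_step = max_step
        self.floor = floor
        self.scores = defaultdict(lambda: 0.5)
        self.trials = defaultdict(int)

    def update(self, tool: str, success: bool) -> None:
        target = 1.0 if success else 0.0
        delta = target - self.scores[tool]
        # Guardrail 1: cap how far a single outcome can move the policy.
        delta = max(-self.max_step, min(self.max_step, delta))
        # Guardrail 2: keep a score floor so a tool can still recover later.
        self.scores[tool] = max(self.floor, self.scores[tool] + delta)
        self.trials[tool] += 1

    def choose(self) -> str:
        # Guardrail 3: only tools with enough trials may override the default.
        trusted = [t for t in self.tools if self.trials[t] >= self.min_trials]
        if not trusted:
            return self.default_tool
        best = max(trusted, key=lambda t: self.scores[t])
        # The default keeps its 0.5 prior score until it has been tried.
        return best if self.scores[best] > self.scores[self.default_tool] else self.default_tool
```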

Best Use Cases

- Workflow orchestration

- Multi-tool agents (e.g., coding, research, operations automation)

- Agents that must balance exploration and exploitation efficiently
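For the exploration/exploitation trade-off, a standard multi-armed bandit rule such as UCB1 is one option (named here as an example, not prescribed by the text). The sketch treats each tool as an arm with a reward between 0 and 1; how that reward is defined is an assumption left to the application, and `UCB1Selector` is a hypothetical name.

```python
import math


class UCB1Selector:
    """UCB1 bandit for choosing among tools or plans."""

    def __init__(self, arms):
        self.arms = list(arms)
        self.counts = {a: 0 for a in self.arms}
        self.rewards = {a: 0.0 for a in self.arms}

    def select(self) -> str:
        # Try every arm once before applying the UCB formula.
        for a in self.arms:
            if self.counts[a] == 0:
                return a
        total = sum(self.counts.values())

        def ucb(a: str) -> float:
            # Mean reward plus an exploration bonus that shrinks as the arm is tried more.
            mean = self.rewards[a] / self.counts[a]
            return mean + math.sqrt(2 * math.log(total) / self.counts[a])

        return max(self.arms, key=ucb)

    def update(self, arm: str, reward: float) -> None:
        self.counts[arm] += 1
        self.rewards[arm] += reward
```

Because the exploration bonus shrinks as a tool is tried more often, the weaker tool is still sampled occasionally, but most calls go to the one with the better observed reward.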

Conclusion: Combining Methods for Robust Adaptive Agents

Modern agent architectures rarely rely on a single adaptation method. Instead, hybrid systems are emerging:

- Memory for long-term facts and preferences

- User feedback for fast behavioural shaping

- Policy updates for continuous optimisation of decision chains
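How the three sources might feed a single decision can be sketched as one context object. `HybridAgentState` is a hypothetical container; in a real system each field would be backed by a memory store, a feedback log and a policy engine rather than by plain dicts and lists.

```python
from dataclasses import dataclass, field


@dataclass
class HybridAgentState:
    memory: dict = field(default_factory=dict)          # long-term facts and preferences
    feedback_rules: list = field(default_factory=list)  # rules distilled from user feedback
    tool_scores: dict = field(default_factory=dict)     # learned policy: tool -> score

    def build_context(self, task: str) -> dict:
        # Combine all three adaptation signals into one input for the next decision.
        facts = [f"{k}: {v}" for k, v in sorted(self.memory.items())]
        tool = max(self.tool_scores, key=self.tool_scores.get) if self.tool_scores else None
        return {"task": task, "facts": facts, "rules": list(self.feedback_rules), "tool": tool}
```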

By combining these three approaches, teams can build agents that are personal, reliable, and continuously improving—all while avoiding the cost and latency of repeated model fine-tuning.