Yes, this is about generative AI. Large language models (LLMs) have become versatile tools capable of handling tasks ranging from creative writing to detailed technical analyses.

I recently wrote about whether chat is the right interface for LLMs. Chat is how most people picture LLMs, so interface design has largely stayed stuck there. But to unlock their real potential, one of the most effective techniques is to narrow their focus, both in the input they receive and in the format of their output. This turns the LLM into a specialized assistant that can deliver fast, actionable results.

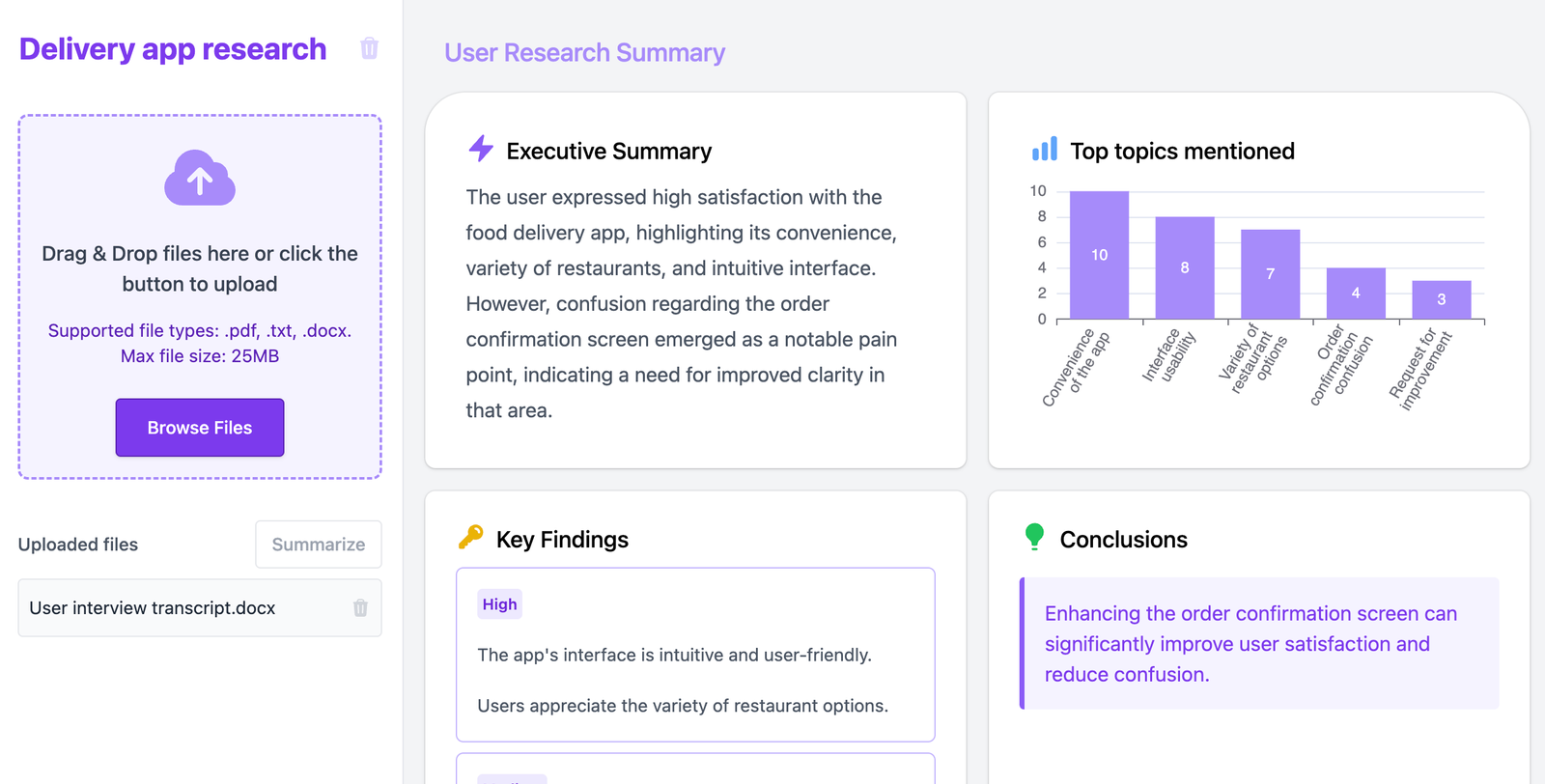

Let’s explore why this works so well, using a concrete example: summarizing user research into a preformatted template.

Why Narrow Inputs Are Powerful

LLMs thrive when given well-structured, specific data to work with. In user research, this might mean providing:

- Interview transcripts.

- Open-ended survey responses.

- Observational notes.

Instead of giving the LLM free rein over all this content, you can pre-process and structure it: for example, by dividing transcripts into chunks or tagging each chunk by theme, such as “challenges,” “needs,” or “feedback.” This targeted input reduces ambiguity and keeps the LLM focused on the right context.
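A minimal Python sketch of this pre-processing step, assuming plain-text transcripts with blank lines between speaker turns. The theme keywords, the `max_chars` limit, and the names `Chunk`, `split_into_chunks`, `tag_chunk`, and `prepare_input` are all hypothetical; keyword matching is only a crude stand-in for tagging by hand or with a model.

```python
import re
from dataclasses import dataclass, field

# Hypothetical keyword lists: a crude stand-in for manual or model-assisted tagging.
THEME_KEYWORDS = {
    "challenges": ["hard", "difficult", "confusing", "frustrating"],
    "needs": ["need", "wish", "want"],
    "feedback": ["love", "like", "hate"],
}


@dataclass
class Chunk:
    text: str
    themes: list[str] = field(default_factory=list)


def split_into_chunks(transcript: str, max_chars: int = 500) -> list[str]:
    """Split on blank lines, then pack paragraphs into chunks of roughly max_chars.

    A single paragraph longer than max_chars is kept whole rather than cut mid-sentence.
    """
    paragraphs = [p.strip() for p in re.split(r"\n\s*\n", transcript) if p.strip()]
    chunks: list[str] = []
    current = ""
    for para in paragraphs:
        if current and len(current) + 1 + len(para) > max_chars:
            chunks.append(current)
            current = para
        else:
            current = f"{current}\n{para}" if current else para
    if current:
        chunks.append(current)
    return chunks


def tag_chunk(text: str) -> list[str]:
    """Return every theme whose keywords appear as whole words in the chunk."""
    lowered = text.lower()
    return [
        theme
        for theme, keywords in THEME_KEYWORDS.items()
        if any(re.search(rf"\b{re.escape(kw)}\b", lowered) for kw in keywords)
    ]


def prepare_input(transcript: str, max_chars: int = 500) -> list[Chunk]:
    """Chunk a transcript and attach theme tags to each chunk."""
    return [Chunk(text, tag_chunk(text)) for text in split_into_chunks(transcript, max_chars)]
```

Each tagged chunk can then be sent to the model on its own, with its tags included in the prompt as context.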

Benefits of Narrow Inputs

- Increased Accuracy: Providing clear boundaries and context minimizes the chances of irrelevant or incorrect interpretations.

- Improved Efficiency: Smaller, structured datasets mean faster processing and quicker results.

- Scalability: When handling large-scale data, a consistent format enables automation without the need for constant reconfiguration.

Why Preformatted Outputs Are Game-Changing

On the output side, preformatted templates ensure consistency and clarity. For user research, you might use templates such as:

- Key Findings Summary:

  - **Challenge**: [Identified user challenge]
  - **Need**: [User's unmet need]
  - **Quote**: "[Relevant user quote]"

- Topic Clustering:

  **Theme**: [General theme]
  - Insight 1: [Specific insight]
  - Insight 2: [Specific insight]

With a defined template, the LLM focuses on filling in the blanks rather than generating freeform responses, leading to cleaner, more actionable outputs.
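One way to keep the model filling in blanks is to hold the template as data, build the prompt from it, and reject any reply that leaves a field empty. This is a hypothetical Python sketch, not tied to any particular LLM provider: `KEY_FINDINGS_FIELDS`, `build_prompt`, and `parse_filled_template` are illustrative names, and the reply format (`Field: value`, one per line) is an assumption.

```python
import re

# Hypothetical field list for the "Key Findings Summary" template.
# Keeping the template as data means adding a field is a one-line change.
KEY_FINDINGS_FIELDS = ["Challenge", "Need", "Quote"]


def build_prompt(fields: list[str], research_notes: str) -> str:
    """Ask the model to fill in exactly these fields, one per line, and nothing else."""
    template = "\n".join(f"{name}: [...]" for name in fields)
    return (
        "Summarize the research notes below by filling in this template. "
        "Reply with the filled-in template only, one field per line.\n\n"
        f"Template:\n{template}\n\n"
        f"Notes:\n{research_notes}"
    )


def parse_filled_template(fields: list[str], response: str) -> dict[str, str]:
    """Read 'Field: value' lines (bold markers allowed) and reject incomplete answers."""
    values: dict[str, str] = {}
    for line in response.splitlines():
        match = re.match(r"\s*\**([A-Za-z ]+?)\**\s*:\s*(.*)", line)
        if match and match.group(1) in fields:
            values[match.group(1)] = match.group(2).strip()
    # A field counts as missing if it is absent, empty, or still the placeholder.
    missing = [name for name in fields if values.get(name, "") in ("", "[...]")]
    if missing:
        raise ValueError(f"Response is missing fields: {missing}")
    return values
```

Adding a sentiment field, for instance, would mean appending `"Sentiment"` to the list; both the prompt and the completeness check pick it up automatically.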

Benefits of Narrow Outputs

- Consistency: Whether processing one file or a hundred, the results adhere to the same structure.

- Interpretability: Stakeholders can immediately understand and act on the output without needing to decode it.

- Streamlined Post-Processing: Structured outputs are easier to integrate into reports, dashboards, or visualizations.
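To illustrate the post-processing point, here is a small sketch that assumes each output has already been parsed into a dictionary of template fields, one per source file. The function name `findings_to_csv` and the `source` column are hypothetical.

```python
import csv
import io


def findings_to_csv(findings: dict[str, dict[str, str]], fields: list[str]) -> str:
    """Turn parsed template outputs (keyed by source file) into CSV text.

    Because every output shares the same fields, one header row covers all of them.
    csv.DictWriter raises ValueError if a row has a key not listed in fields,
    which catches outputs that drifted from the template; missing keys become "".
    """
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["source", *fields], lineterminator="\n")
    writer.writeheader()
    for source, values in findings.items():
        writer.writerow({"source": source, **values})
    return buffer.getvalue()
```

The resulting text can be saved as a `.csv` file and opened in a spreadsheet or loaded into most dashboard tools.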

Real-World Use Case: Summarizing User Research

Imagine you’ve completed a round of user interviews and need to distill the findings into a report. Instead of manually sifting through hours of transcripts:

- Prepare Input: Divide the transcript into smaller chunks and tag each chunk with metadata (e.g., question type, topic).

- Define Template: Use a format like:

  Theme: [Major theme]
  - Key Insight 1: [Insight related to the theme]
  - Key Insight 2: [Another insight]

- Feed into the LLM: Ask the model to identify themes and extract insights for each.
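The three steps could be wired together roughly as below. This sketch assumes chunks arrive as dictionaries with `text`, `topic`, and `question_type` keys, and it takes the model call as a plain function so it is not tied to any SDK. `summarize_chunks`, `parse_theme_block`, and `fake_model` are hypothetical names; `fake_model` just returns a canned reply so the example runs offline.

```python
from typing import Callable

# The template from the steps above, sent verbatim to the model.
THEME_TEMPLATE = (
    "Theme: [Major theme]\n"
    "- Key Insight 1: [Insight related to the theme]\n"
    "- Key Insight 2: [Another insight]"
)


def parse_theme_block(response: str) -> dict:
    """Extract the theme and its insights from a filled-in template."""
    theme, insights = None, []
    for raw in response.splitlines():
        line = raw.strip()
        if line.startswith("Theme:"):
            theme = line[len("Theme:"):].strip()
        elif line.startswith("- Key Insight") and ":" in line:
            insights.append(line.split(":", 1)[1].strip())
    if not theme or not insights:
        raise ValueError("Model reply did not follow the template")
    return {"theme": theme, "insights": insights}


def summarize_chunks(chunks: list[dict], call_model: Callable[[str], str]) -> list[dict]:
    """Send each tagged chunk to the model with the template and parse the reply."""
    results = []
    for chunk in chunks:
        prompt = (
            "Identify the main theme in this interview excerpt and extract key insights. "
            "Answer using exactly this template and nothing else:\n"
            f"{THEME_TEMPLATE}\n\n"
            f"Metadata: topic={chunk['topic']}, question type={chunk['question_type']}\n"
            f"Excerpt:\n{chunk['text']}"
        )
        results.append(parse_theme_block(call_model(prompt)))
    return results


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a real LLM client call."""
    return (
        "Theme: Saving work\n"
        "- Key Insight 1: Users are unsure whether drafts are saved\n"
        "- Key Insight 2: Users want an explicit save confirmation"
    )


if __name__ == "__main__":
    sample = [{"text": "I never know if my draft saved.", "topic": "drafts", "question_type": "open"}]
    print(summarize_chunks(sample, fake_model))
```

Replacing `fake_model` with a function that calls your provider's API should be the only change needed to run this against a real model.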

In seconds, you have actionable summaries ready for your team. Want to add topic clustering or sentiment analysis? Just tweak the template.

Beyond Research: Applications of Narrow Inputs and Outputs

This approach isn’t limited to user research. It works across domains:

- Customer Support: Summarize customer feedback into “positive” or “negative” clusters with suggested action points.

- Content Creation: Generate SEO-friendly blog outlines based on predefined structures.

- Education: Create lesson summaries or highlight common areas where students struggle.
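For the customer-support case, the narrow output can be as strict as a fixed label set. The sketch below assumes the prompt asks for a JSON object with `sentiment` and `action` keys; it shows only the validation and grouping side, and the key names, `ALLOWED_SENTIMENTS`, and both function names are hypothetical.

```python
import json
from collections import defaultdict

# The narrow output: the model may only answer with one of these labels.
ALLOWED_SENTIMENTS = {"positive", "negative"}


def parse_feedback_label(response: str) -> tuple[str, str]:
    """Parse a reply like {"sentiment": "negative", "action": "..."} and enforce the label set."""
    data = json.loads(response)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(data, dict):
        raise ValueError("Expected a JSON object")
    sentiment = str(data.get("sentiment", "")).strip().lower()
    if sentiment not in ALLOWED_SENTIMENTS:
        raise ValueError(f"Unexpected sentiment label: {sentiment!r}")
    return sentiment, str(data.get("action", "")).strip()


def cluster_feedback(responses: list[str]) -> dict[str, list[str]]:
    """Group suggested action points by sentiment label."""
    clusters: dict[str, list[str]] = defaultdict(list)
    for response in responses:
        sentiment, action = parse_feedback_label(response)
        clusters[sentiment].append(action)
    return dict(clusters)
```

Rejecting anything outside the label set, rather than trying to interpret it, is what keeps the clusters clean.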

Why This Method Scales

At scale, narrow inputs and outputs are transformational. Because every input goes in with the same structure and every result comes out with the same structure, organizations can process massive datasets quickly, reliably, and with minimal human oversight. Whether analyzing thousands of support tickets or synthesizing years of research into a single dashboard, this focused approach turns the LLM into a reliable collaborator rather than an unpredictable creative tool.
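A rough sketch of what processing at scale can look like, assuming `summarize` wraps one model call plus template parsing and raises `ValueError` on a malformed reply. Malformed replies are set aside for a person to check rather than stopping the batch. `process_batch`, `fake_summarize`, and the default worker count are illustrative assumptions.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Optional


def process_batch(
    tickets: list[str],
    summarize: Callable[[str], dict],
    max_workers: int = 8,
) -> tuple[list[dict], list[tuple[int, str]]]:
    """Summarize every ticket in parallel.

    Replies that fail validation (ValueError) are collected with their index
    for human review instead of stopping the whole batch; other exceptions
    still propagate.
    """

    def run_one(item: tuple[int, str]) -> tuple[int, Optional[dict], Optional[str]]:
        index, ticket = item
        try:
            return index, summarize(ticket), None
        except ValueError as exc:
            return index, None, str(exc)

    successes: list[dict] = []
    failures: list[tuple[int, str]] = []
    # Threads suit I/O-bound work such as HTTP calls to a hosted model.
    # pool.map yields results in input order.
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        for index, result, error in pool.map(run_one, enumerate(tickets)):
            if error is None:
                successes.append(result)
            else:
                failures.append((index, error))
    return successes, failures


def fake_summarize(ticket: str) -> dict:
    """Hypothetical stand-in for 'call the model, then parse its templated reply'."""
    if not ticket.strip():
        raise ValueError("empty ticket")
    category = "billing" if "invoice" in ticket.lower() else "other"
    return {"ticket": ticket, "category": category}
```

Threads fit here because each call spends most of its time waiting on the network; a sensible `max_workers` depends on your provider's rate limits.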

Final Thoughts

By giving an LLM a narrow scope, in both the input it receives and the output it generates, you can harness its full power in a fast, scalable, and reliable way. Whether you’re summarizing user research, clustering general topics, or automating repetitive tasks, this method brings precision, efficiency, and consistency.

So next time you sit down to use an LLM, remember: less can be more. Provide some structure, and watch the magic happen.

If you want to have a go, take a look at my project from last weekend, Thematic Lens.