AI has become a driving force in software, from personalised recommendations to critical decisions in healthcare and finance. This creates a challenge: how do we ensure that AI systems remain understandable and trustworthy to the people who use them?

There are a few ways you can help build trust in AI applications or, more generally, “complex systems”. Here, I want to talk about explainability. By designing AI or complex systems that can clearly communicate their processes and decisions, we empower users, foster trust, and pave the way for ethical AI practices. In this post, we’ll explore how explainability can be designed into AI systems, drawing on insights from Google’s People + AI Research (PAIR) team.

IBM have labelled this XAI: Explainable Artificial Intelligence.

What Is Explainability in AI?

At its core, explainability refers to the ability of an AI system to give users insight into how it works and why it produces specific outputs. The goal is to build trust. It answers questions like:

- “Why did the AI flag this transaction as fraudulent?”

- “How has the system come up with this forecast?”

Explainability can take two forms:

- Global explainability: Explains how the entire model operates (e.g., “This algorithm prioritises factor X over factor Y”). It gives an overview, with detail in places.

- Local explainability: Clarifies why a specific decision was made (e.g., “This decision was influenced by factors A, B, and C”). It is attached to specific outputs.
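To make the distinction concrete, here is a minimal sketch using a toy linear scoring model. The feature names, weights and bias are made up for illustration, and the features are assumed to be standardised (0 means an average applicant) so the weights are comparable. The global explanation ranks features by the size of their weight; the local explanation breaks one applicant’s score into per-feature contributions.

```python
# Toy linear model: score = BIAS + sum(weight * feature value).
# All names and numbers are hypothetical; features are assumed standardised
# so that 0 represents an average applicant and weights are comparable.
WEIGHTS = {"income": 0.8, "debt_ratio": -1.2, "years_employed": 0.3, "open_accounts": -0.1}
BIAS = 0.5


def score(applicant: dict[str, float]) -> float:
    """Model output for one applicant."""
    return BIAS + sum(WEIGHTS[f] * applicant[f] for f in WEIGHTS)


def global_explanation() -> list[str]:
    """How the model behaves overall: features ranked by absolute weight."""
    return sorted(WEIGHTS, key=lambda f: abs(WEIGHTS[f]), reverse=True)


def local_explanation(applicant: dict[str, float]) -> dict[str, float]:
    """Why this applicant got this score: per-feature contributions, largest first."""
    contributions = {f: WEIGHTS[f] * applicant[f] for f in WEIGHTS}
    return dict(sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True))


if __name__ == "__main__":
    applicant = {"income": -0.5, "debt_ratio": 1.5, "years_employed": 2.0, "open_accounts": 0.0}
    print(global_explanation())  # ['debt_ratio', 'income', 'years_employed', 'open_accounts']
    print(local_explanation(applicant))
    print(score(applicant))
```

For a linear model like this, the local contributions plus the bias add up exactly to the score. Non-linear models need a dedicated attribution method (SHAP or integrated gradients, for example) to produce a comparable breakdown.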

These explanations aren’t just technical necessities; they’re essential for fostering trust in AI systems. Imagine a self-driving car making a sudden stop. Without an explanation, users might mistrust the system. But if the car can explain that it detected an obstacle, trust can be preserved.

Trust is essential. So what makes you trust a technology like your car? Google’s People + AI Guidebook suggests three things: ability (the competence to get the job done), reliability (how consistently it delivers on that ability), and benevolence (whether the system is trying to do good for you).

Human-Centered Design Principles for Explainability

Designing for explainability isn’t just a technical challenge – it’s a human-centered one. Google’s PAIR team emphasizes the importance of crafting explanations that resonate with the people who interact with AI systems. Here are some key principles:

1. Transparency

People don’t need to understand exactly how AI systems work, but they might want to understand how a system arrived at an output. Transparency might involve explaining which factors influenced a decision or visualising how data flows through a model. Make the underlying data easy to find and clear to read. How does it get transformed? What alternatives have been considered? When was this model last edited? By whom?

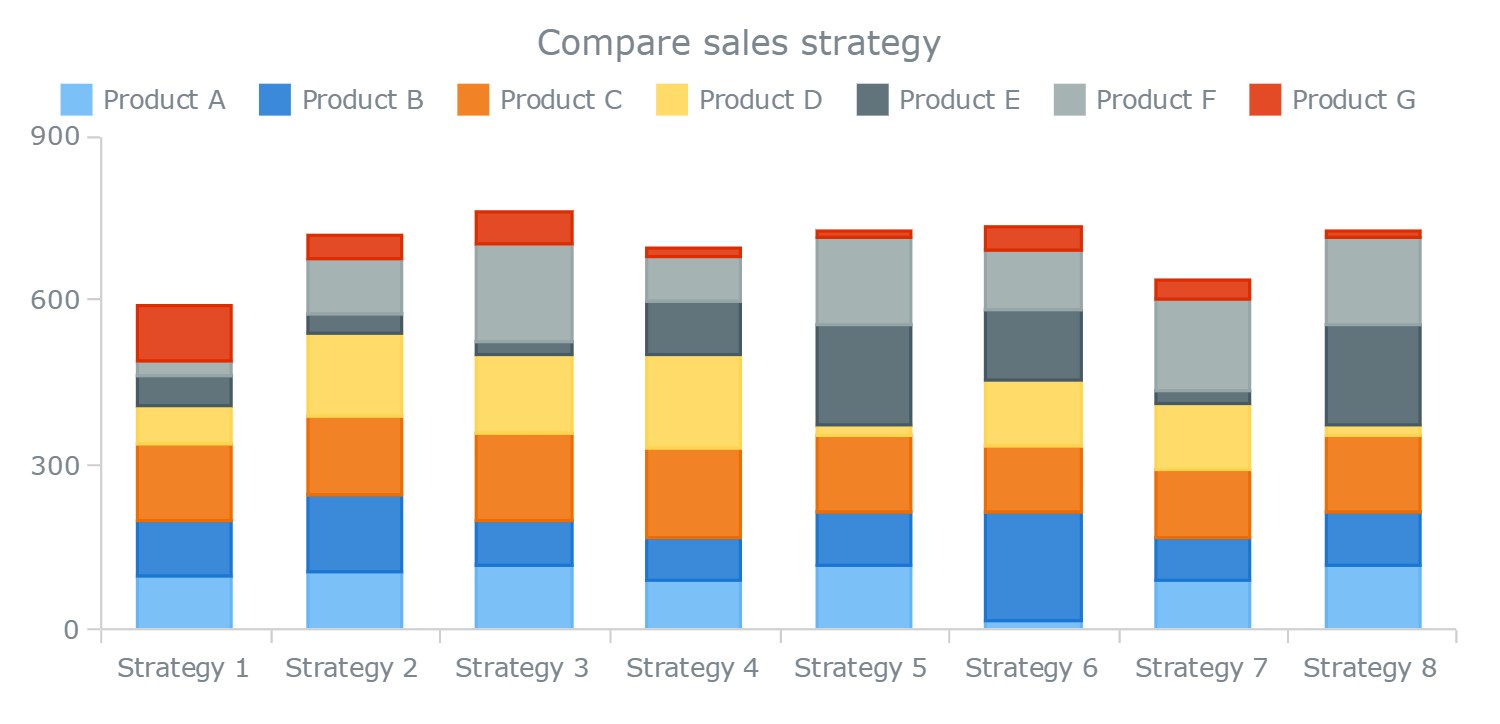

If you are presenting metrics, show a breakdown. What is this number made up of? Stacked bar charts are great for this. Users can also be reassured by seeing the data in tabular format. This generally leads on to the question: “Can I have a download-to-Excel button?” But hey, why not? If it lets them interrogate the data themselves, go for it.

Have a look at these charts: a stacked bar chart on the left shows the breakdown of a bigger number, and a waterfall chart on the right shows how a single number might have been calculated.
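Behind a waterfall chart is a simple running total: start from a baseline, apply each change in turn, and the last bar is the headline number. Here is a minimal sketch, with hypothetical labels and figures, that produces the rows a charting library could plot:

```python
def waterfall(start_label: str, start_value: float,
              steps: list[tuple[str, float]]) -> list[tuple[str, float, float]]:
    """Return (label, change, running_total) rows for a waterfall chart.

    The first row is the starting value; each later row applies one change,
    so the final running total is the headline number being explained.
    """
    rows = [(start_label, start_value, start_value)]
    total = start_value
    for label, change in steps:
        total += change
        rows.append((label, change, total))
    return rows


if __name__ == "__main__":
    # Hypothetical revenue forecast, in £k.
    rows = waterfall("Last year", 100, [("Price rise", 12), ("Lost customers", -8), ("New product", 15)])
    for label, change, running_total in rows:
        print(f"{label:<15}{change:>+6}{running_total:>6}")
```

Keeping every intermediate total, rather than just the final one, is what lets a user hover over any bar and see exactly how the number was built up.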

Another way to demonstrate reliability is to display past predictions. Let the user scroll back through past outputs and overlay what actually happened. Add event data to show what has changed or happened since.
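A track record can be as simple as a table of past forecasts next to the actual outcomes, with the error for each period and any notable events. The sketch below uses hypothetical data and assumes mean absolute percentage error (MAPE) as the headline reliability figure; other error measures would work just as well.

```python
from dataclasses import dataclass


@dataclass
class PastForecast:
    period: str
    predicted: float
    actual: float
    event: str = ""  # optional annotation shown on the chart, e.g. a promotion


def track_record(history: list[PastForecast]) -> tuple[list[tuple], float]:
    """Pair each past forecast with its outcome and return (rows, MAPE in %).

    Assumes actual values are non-zero, since the error is relative to them.
    """
    rows, abs_errors = [], []
    for f in history:
        error_pct = 100 * (f.predicted - f.actual) / f.actual  # signed % error
        abs_errors.append(abs(error_pct))
        rows.append((f.period, f.predicted, f.actual, round(error_pct, 1), f.event))
    mape = sum(abs_errors) / len(abs_errors)
    return rows, mape


if __name__ == "__main__":
    history = [
        PastForecast("Jan", 110, 100),
        PastForecast("Feb", 95, 100),
        PastForecast("Mar", 120, 150, event="Promotion launched"),
    ]
    rows, mape = track_record(history)
    for row in rows:
        print(row)
    print(f"Average error: {mape:.1f}%")
```

Showing the signed error per period (not just the average) lets users see whether the system tends to over- or under-predict, and the event column gives them a reason for the outliers.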

2. User Control – dynamic interactivity

Good design allows users to interact with the system and seek deeper explanations. For example, a user might want to adjust the weight of certain factors to see how it impacts recommendations.
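Here is a minimal sketch of that kind of control, assuming a recommender that scores each item as a weighted sum of factor scores. The restaurant names, factors and weights are hypothetical; in a real interface the weights would come from sliders, and re-running the ranking on every change lets the user see the effect immediately.

```python
def rank(items: list[dict], weights: dict[str, float]) -> list[str]:
    """Return item names ordered by weighted score, highest first."""

    def total(item: dict) -> float:
        # Factors the user has not weighted count for nothing.
        return sum(weights.get(factor, 0.0) * value for factor, value in item["factors"].items())

    return [item["name"] for item in sorted(items, key=total, reverse=True)]


if __name__ == "__main__":
    # Hypothetical items with factor scores between 0 and 1.
    items = [
        {"name": "Trattoria", "factors": {"rating": 0.9, "closeness": 0.2}},
        {"name": "Noodle Bar", "factors": {"rating": 0.6, "closeness": 0.9}},
    ]
    print(rank(items, {"rating": 1.0, "closeness": 0.2}))  # ['Trattoria', 'Noodle Bar']
    # The user drags the "closeness" slider up and the order flips.
    print(rank(items, {"rating": 1.0, "closeness": 1.0}))  # ['Noodle Bar', 'Trattoria']
```

A linear weighting keeps the cause and effect easy to read: the user can see which slider moved an item up or down, which is much harder to convey with an opaque model.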

Feedback is another way to get users interacting with a system: ask them to rate a prediction or outcome, and use those ratings to help influence future model outputs.

Tying user actions to immediate responses can also help explain why things are happening. Have a look at this dynamic interaction example from the savings planner Wealthlytics. The tool allows users to drag their retirement age and immediately see the effect on their savings.
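The calculation behind a slider like this can be very small. The sketch below is not how Wealthlytics works; it assumes a fixed annual contribution paid at the end of each year and a constant growth rate, both hypothetical. Recomputing it each time the retirement-age slider moves is what makes the cause and effect visible.

```python
def projected_savings(current_age: int, retirement_age: int, balance: float,
                      annual_contribution: float, growth_rate: float) -> float:
    """Project the balance at retirement with compound growth.

    Each year the balance grows by growth_rate, then that year's contribution
    is added. If retirement_age <= current_age, the balance is returned unchanged.
    """
    for _ in range(retirement_age - current_age):
        balance = balance * (1 + growth_rate) + annual_contribution
    return balance


if __name__ == "__main__":
    # Hypothetical user: aged 35 with £20,000 saved, paying in £6,000 a year at 5% growth.
    for age in (60, 65, 67):
        print(age, round(projected_savings(35, age, 20_000, 6_000, 0.05)))
```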

It may sound obvious, but users almost expect tooltips and drilldowns nowadays. Showing the breakdown at a point in time lets users interrogate the numbers themselves and follow how they add up. Have a look at how Wealthlytics shows tooltips and breaks a top-level number down into its component parts.

3. Appropriateness

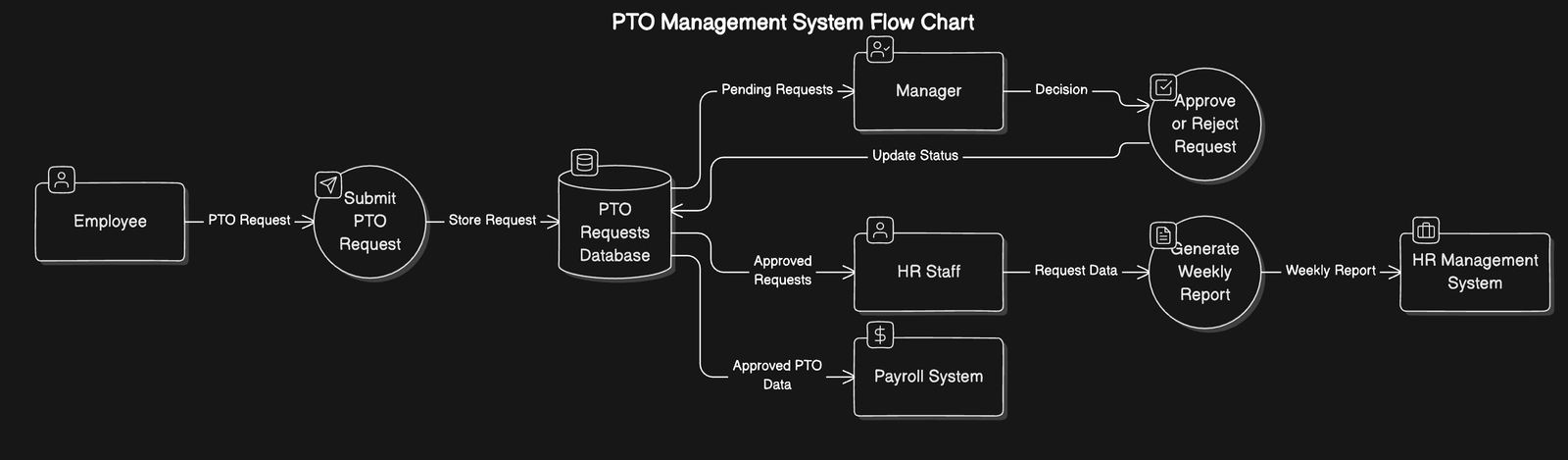

Explanations must be tailored to the user’s knowledge level. A data scientist might prefer confidence intervals, while a lay user needs plain-language explanations and a chart type they recognise. Not all users will want to see a complex system diagram. There are also different ways of displaying confidence to consider, e.g. a simple percentage vs a categorised traffic-light system.
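The same model confidence can be rendered for different audiences. Here is a minimal sketch, assuming the model outputs a probability between 0 and 1; the traffic-light thresholds are hypothetical and would need tuning (and user testing) for a real product.

```python
def as_percentage(probability: float) -> str:
    """Precise display, e.g. for data scientists: '87% confident'."""
    return f"{probability:.0%} confident"


def as_traffic_light(probability: float, amber_from: float = 0.6, green_from: float = 0.85) -> str:
    """Categorised display for lay users. Thresholds are hypothetical defaults."""
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must be between 0 and 1")
    if probability >= green_from:
        return "green"
    if probability >= amber_from:
        return "amber"
    return "red"


if __name__ == "__main__":
    for p in (0.93, 0.72, 0.41):
        print(as_percentage(p), "->", as_traffic_light(p))
```

The trade-off is precision for readability: a traffic light hides the difference between 0.86 and 0.99, which is usually fine for a lay user but not for someone debugging the model.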

These principles align with broader UX/UI design best practices, such as prioritising clarity and reducing cognitive overload.

Explaining outputs at the point of delivery is key here. However, the groundwork is laid earlier: onboarding and marketing materials around the product build up to this point.

All of these tactics can help build and maintain trust.

Designing for Different User Types

Different users have different needs – you have to consider who you are designing for and what they need from explainability:

End Users: For everyday users, simplicity is key. For instance, a credit scoring app might show: “Your loan application was denied because your credit score is too low.”

AI Practitioners: Developers and data scientists need tools to debug and improve models. Tools like Google’s What-If Tool allow users to visualize how input data impacts predictions.

Regulators and Stakeholders: Policymakers and business leaders need detailed reports that explain an AI system’s behavior over time and its compliance with ethical standards.
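One way to serve these groups is to compute a single set of feature contributions and render it differently for each audience. The sketch below assumes those contributions already exist as signed numbers (negative pushes towards denial); the feature names and wording are hypothetical.

```python
# Hypothetical mapping from model feature names to plain-language phrases.
FRIENDLY_NAMES = {
    "credit_score": "your credit score",
    "debt_ratio": "your debt compared with your income",
    "years_employed": "how long you have been employed",
}


def explain_for_end_user(decision: str, contributions: dict[str, float]) -> str:
    """One plain-language sentence naming the factor that mattered most."""
    if decision == "denied":
        factor = min(contributions, key=contributions.get)  # most negative contribution
        return f"Your loan application was denied mainly because of {FRIENDLY_NAMES[factor]}."
    factor = max(contributions, key=contributions.get)  # most positive contribution
    return f"Your loan application was approved, helped most by {FRIENDLY_NAMES[factor]}."


def explain_for_practitioner(contributions: dict[str, float]) -> list[str]:
    """Every feature with its raw contribution, largest magnitude first."""
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return [f"{name:<15}{value:+.3f}" for name, value in ranked]


if __name__ == "__main__":
    contributions = {"credit_score": -1.4, "debt_ratio": -0.3, "years_employed": 0.5}
    print(explain_for_end_user("denied", contributions))
    print("\n".join(explain_for_practitioner(contributions)))
```

Deriving both views from the same numbers keeps them consistent: the sentence the customer sees can always be traced back to the detailed attribution a developer or auditor inspects.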

By designing explanations with specific user needs in mind, we can make AI more transparent and useful for everyone.

Challenges and Trade-Offs

Designing explainability isn’t without its hurdles. Here are a few key challenges:

Balancing Simplicity and Accuracy: Simplifying explanations too much can lead to misunderstandings, while overly detailed explanations might overwhelm users.

Avoiding Explanation Fatigue: Too many details or long onboarding can frustrate users. Thoughtful design keeps explanations concise yet informative.

Addressing Biases: Explainability often reveals biases in AI models. While this transparency is crucial, it can be challenging to address these biases effectively and fairly.
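One practical way to balance simplicity and accuracy is to show only the biggest factors, while being honest about how much of the decision they account for. Here is a minimal sketch, assuming you already have signed per-feature contributions; the 75% coverage target and three-factor cap are hypothetical defaults to tune with users.

```python
def summarise(contributions: dict[str, float], coverage: float = 0.75,
              max_factors: int = 3) -> tuple[list[str], float]:
    """Pick the fewest top factors that explain `coverage` of the total effect.

    Returns (factor names shown, share of total absolute contribution they cover).
    Stops early at `max_factors` to avoid overwhelming the user.
    """
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    total = sum(abs(v) for _, v in ranked)
    if total == 0:
        return [], 1.0  # nothing influenced the result, so nothing to show
    shown, covered = [], 0.0
    for name, value in ranked:
        if len(shown) == max_factors or covered / total >= coverage:
            break
        shown.append(name)
        covered += abs(value)
    return shown, covered / total


if __name__ == "__main__":
    contributions = {"debt_ratio": -1.8, "years_employed": 0.6, "income": -0.4, "open_accounts": -0.2}
    shown, share = summarise(contributions)
    print(f"Top factors: {', '.join(shown)} (about {share:.0%} of the overall effect)")
```

Reporting the covered share alongside the short list is the honest part: users get a simple explanation without being misled into thinking it is the whole story.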

The PAIR team advocates for iterative design processes to navigate these challenges, incorporating user feedback and continuous testing to refine explanations.

Case Studies and Examples

Google’s PAIR research has produced tools and frameworks that make AI explainability tangible. One example is the What-If Tool, which lets users explore how models behave with different inputs. For instance, users can tweak factors like age or income to see how they influence predictions in a loan approval model.
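The idea behind this kind of what-if exploration can be sketched in a few lines: hold everything else fixed, nudge one input, and record where the decision flips. This is not the What-If Tool’s API; the `approve` function below is a hypothetical stand-in for a trained loan model, with income and debts in thousands.

```python
def approve(applicant: dict[str, int]) -> bool:
    """Hypothetical stand-in for a trained model: approve if income covers debts by enough."""
    return applicant["income_k"] >= 30 + 2 * applicant["debts_k"]


def find_flip_point(model, applicant: dict[str, int], feature: str, step: int, limit: int):
    """Increase one feature by `step` until the decision changes.

    Returns the first value that flips the decision, or None if it never flips
    before `limit`. Assumes step > 0.
    """
    original = model(applicant)
    probe = dict(applicant)  # copy so the real applicant record is untouched
    while probe[feature] + step <= limit:
        probe[feature] += step
        if model(probe) != original:
            return probe[feature]
    return None


if __name__ == "__main__":
    applicant = {"age": 30, "income_k": 35, "debts_k": 5}
    print(find_flip_point(approve, applicant, "income_k", step=1, limit=100))  # 40
    print(find_flip_point(approve, applicant, "age", step=1, limit=80))  # None: age has no effect here
```

A result like “approved at an income of 40k” is a local, counterfactual explanation that a user can act on, and a feature that never flips the decision (like age in this toy model) is just as informative.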

Another example is Google Cloud’s Explainable AI platform, which provides feature attributions for predictions. This helps businesses and developers understand what drives an AI model’s outputs and refine it for better outcomes.

These tools illustrate how explainability isn’t just theoretical – it’s actionable, driving better decisions and increased trust.

The Future of Explainable AI

Explainability is more than a feature – it’s a necessity for ethical, human-centered AI design. As AI systems evolve, so must our approach to making them understandable. Emerging trends, like multimodal explanations and generative AI models that can create customised explanations, point to exciting possibilities.

But one thing remains clear: designing for explainability is an ongoing process. By embracing user-centric design principles and leveraging tools like those from Google PAIR, we can create AI systems that are not only powerful but also trustworthy and empowering.

Luckily, humans are forgetful and forgiving and will (hopefully) give you a couple of attempts to get this right.

Are you building or designing AI systems? Start by asking: How can I make this system explainable for the people who will use it? Adopting human-centered design principles and iterating based on user feedback is the first step toward creating AI that works for everyone.