“Enterprise AI strategy” is something you’d find on a consultant’s slide deck with lots of arrows, maybe a few buzzwords, and a roadmap that stretches into some hazy, futuristic quarter where everything just “works.”

But in reality, the organisations making the most meaningful progress with AI aren’t following 100-slide strategies. They’re building small, scrappy, useful things and then connecting them into something bigger. They’re laying short stretches of track that actually go somewhere, then joining them into a network.

That’s the heart of this post: a product-thinking, bottom-up, user-first approach to enterprise AI. Start with something real, something that touches real (production) data, something that actually helps someone do their job better. Then iterate. Don’t get lost in research notebooks or theoretical “AI Centers of Excellence.” Build. Ship. Learn.

Here’s one way to think about that journey, which might go out the window in a week because this stuff changes fast.

Step One: CustomGPTs — Prove the Possibility

Before you start talking about architecture or governance or “enterprise readiness,” you need to show people what AI can actually do.

CustomGPTs are the perfect entry point. They’re lightweight, flexible, and can be built without engineering overhead. Create one to help your support team respond to customer inquiries faster, or to rewrite technical documentation for non-technical audiences.

The goal isn’t to build a perfect system. It’s to spark that moment of realisation across your teams: “Oh. The model can do this.”

That’s product thinking at work. You’re not running pilots in isolation or demos in a vacuum. You’re giving people real, usable tools that immediately make sense in their day-to-day.

These GPTs are your proof of concept at scale: a low-risk way to test how LLMs fit into existing workflows and to learn what actually drives value.

Step Two: Automate the Flow with n8n to Cut Out the Copy-Paste

Once you’ve got a few CustomGPTs in play, you’ll start noticing something: people love them, but feeding them context takes time and causes frustration.

That’s because most enterprise users end up juggling context between tools. Copying a chunk of CRM data into ChatGPT. Pasting the output back into a ticketing system. Downloading, uploading, repeating. We can go beyond chat interfaces!

This is where n8n comes in. Other AI workflow builders are available, but I find n8n strikes the balance between being easy to use and technical enough to actually get things done.

It’s the automation layer that connects your GPTs to the rest of your ecosystem. Think of it as the connective tissue that lets data flow in and out without human friction. Customer info from Salesforce? Pipe it in automatically. Responses or summaries? Send them straight to Notion, Jira, or Slack.
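
To make the shape of that flow concrete, here is a minimal sketch in Python. It is not n8n's workflow format (n8n is built visually, node by node); it only mirrors the same three stages as plain functions. Every name here (`Ticket`, `build_prompt`, `run_workflow`, and the three connector callables) is hypothetical, and the connectors are passed in so the sketch runs without any real CRM, model, or chat credentials.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Ticket:
    """A hypothetical support ticket pulled from a CRM."""
    ticket_id: str
    customer: str
    body: str


def build_prompt(ticket: Ticket) -> str:
    """Build the prompt a user would otherwise assemble by copy-paste."""
    return (
        "Summarise this support ticket in two sentences and suggest a reply.\n"
        f"Customer: {ticket.customer}\n"
        f"Ticket: {ticket.body}"
    )


def run_workflow(
    fetch_ticket: Callable[[str], Ticket],       # assumption: wraps a CRM lookup
    call_model: Callable[[str], str],            # assumption: wraps an LLM call
    post_message: Callable[[str, str], None],    # assumption: wraps Slack, Jira, etc.
    ticket_id: str,
    channel: str,
) -> str:
    """Fetch the record, ask the model, deliver the result. No human in the loop."""
    ticket = fetch_ticket(ticket_id)
    summary = call_model(build_prompt(ticket))
    post_message(channel, f"[{ticket.ticket_id}] {summary}")
    return summary


if __name__ == "__main__":
    # Stand-in connectors so the sketch runs offline.
    sent = []
    run_workflow(
        fetch_ticket=lambda tid: Ticket(tid, "Acme Ltd", "Login page times out."),
        call_model=lambda prompt: "Customer cannot log in; suggest clearing cache.",
        post_message=lambda ch, msg: sent.append((ch, msg)),
        ticket_id="T-1",
        channel="#support",
    )
    print(sent)
```

In a workflow builder, each of those three callables would correspond roughly to a node: a trigger or lookup, a model step, and a delivery step. Keeping them swappable is the point, since the destination will change long before the pattern does.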

You’re moving from manual prompt engineering to operationalised intelligence.

Every workflow automated is one less reason for your users to disengage and one step closer to real, scalable adoption.

Step Three: Custom Interfaces and Robust Architecture — From Widgets to Systems

After your prototypes and automations come to life, you’ll start seeing the bigger picture emerge: it’s not about a handful of clever GPTs. It’s about how they fit into the broader system of how your company works.

This is when you evolve from ad hoc experiments to product-grade AI experiences.

Build custom interfaces that fit your users’ and organisation’s specific workflows. Move to a more robust, secure architecture – one that can manage permissions, context, and multi-source data reliably.
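
As one illustration of what "managing permissions, context, and multi-source data" can mean in practice, the sketch below filters documents by the user's roles before anything reaches the model, then labels each piece by its source. It is a simplified assumption of how such a layer might look, not a reference design: `Document`, `build_context`, the role names, and the character budget are all hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet, List, Set


@dataclass(frozen=True)
class Document:
    source: str                   # hypothetical source label, e.g. "crm" or "wiki"
    text: str
    allowed_roles: FrozenSet[str]  # roles permitted to see this document


def build_context(docs: List[Document], user_roles: Set[str], max_chars: int = 2000) -> str:
    """Return source-labelled context containing only what this user may see.

    Documents are taken in order until the character budget would be exceeded.
    """
    visible = [d for d in docs if d.allowed_roles & user_roles]
    parts: List[str] = []
    used = 0
    for doc in visible:
        entry = f"[{doc.source}] {doc.text}"
        if used + len(entry) > max_chars:
            break
        parts.append(entry)
        used += len(entry)
    return "\n".join(parts)


if __name__ == "__main__":
    docs = [
        Document("crm", "Acme renewal due in March", frozenset({"sales"})),
        Document("hr", "Salary bands", frozenset({"hr"})),
        Document("wiki", "Refund policy: 30 days", frozenset({"sales", "support"})),
    ]
    # A sales user sees the CRM and wiki entries, never the HR one.
    print(build_context(docs, {"sales"}))
```

The design choice worth noting is that filtering happens before prompt assembly, so the model never sees data the user could not open themselves. A real system would likely enforce this at the data source as well.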

At this point, the focus shifts from “getting something working” to “making it foundational.” You start wiring in shared data lakes so that models have richer, real-time context. You invest in observability, performance, and security.

And suddenly, what started as a few isolated GPTs becomes a cohesive ecosystem where AI is embedded in every workflow, every department, every decision.

What’s important here is the mindset.

This isn’t about chasing an all-encompassing AI platform or waiting for the next shiny integration. It’s about thinking like a product team: build something valuable for one user, prove it works, and then connect the dots.

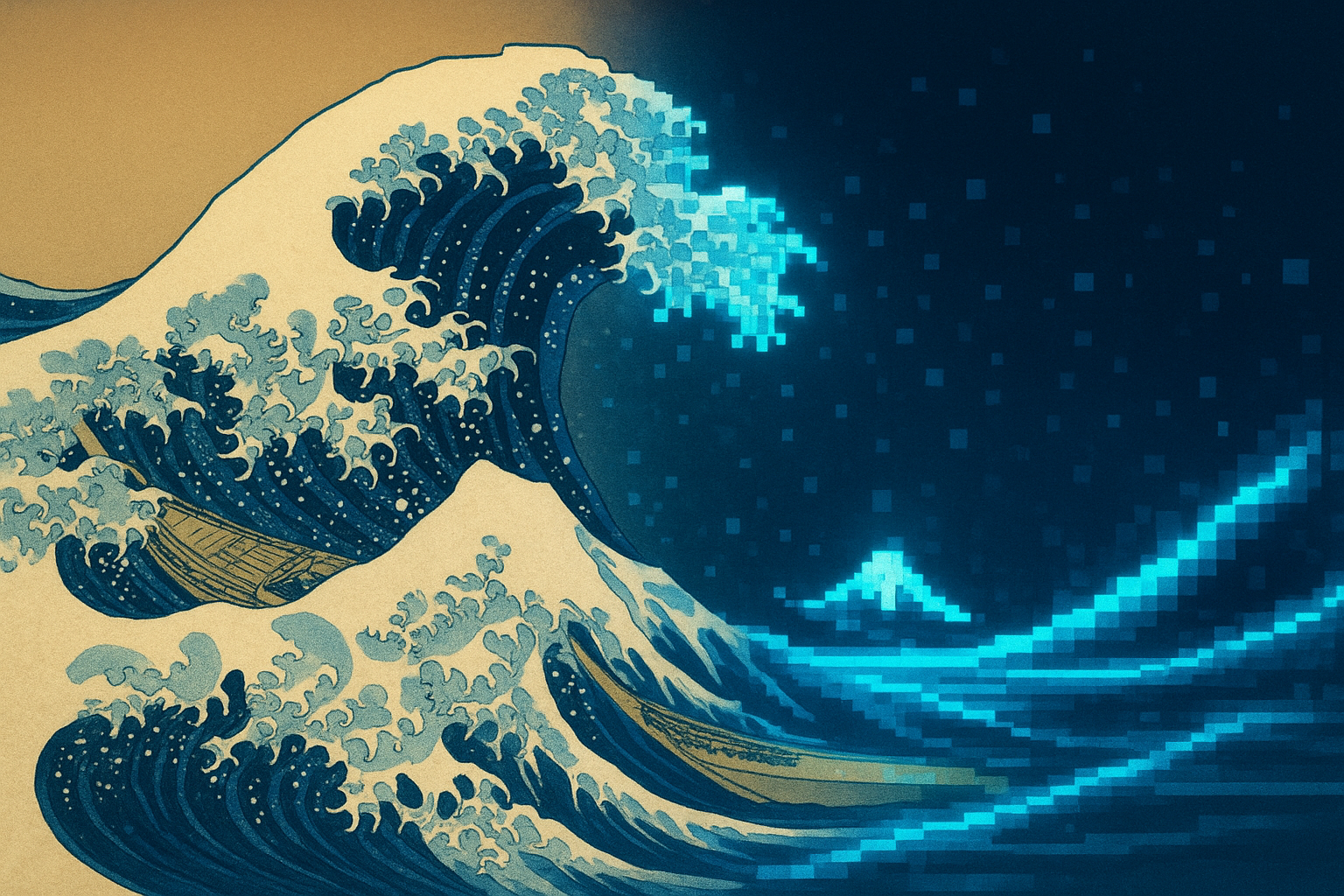

Individually valuable, collectively transformative. What is an ocean but a multitude of drops?

Each step adds a layer of depth and capability. Each prototype teaches you something. And because you’re using real data, in real environments, you avoid the trap so many fall into: the endless cycle of “AI experiments” that never quite make it out of a notebook.

So yes, this playbook might change next quarter. The tools will evolve, the models will improve, the architectures will get cleaner. But the core pattern will stay the same:

- Prove it with CustomGPTs.

- Scale it with automation (n8n).

- Embed it with custom interfaces and architecture.

That’s the enterprise AI strategy for right now. It’s iterative, it’s hands-on, and it’s grounded in reality.

Because transformation doesn’t come from vision statements. It comes from products people actually use.

And if you get that part right, the rest of your AI strategy has a funny way of writing itself.