Back in the early days of building apps, when we were still deploying things with FTP and debugging with `alert()`, one of the most thrilling moments was just watching someone use your thing. Not a simulated user, not a test suite, but a real human being poking around, getting confused, lighting up when something worked, or quietly recoiling when it didn’t.

That thrill? That awkward, amazing, nerve-wracking exposure? That’s feedback. And I’ve come to believe it’s the most underrated part of working with AI agents.

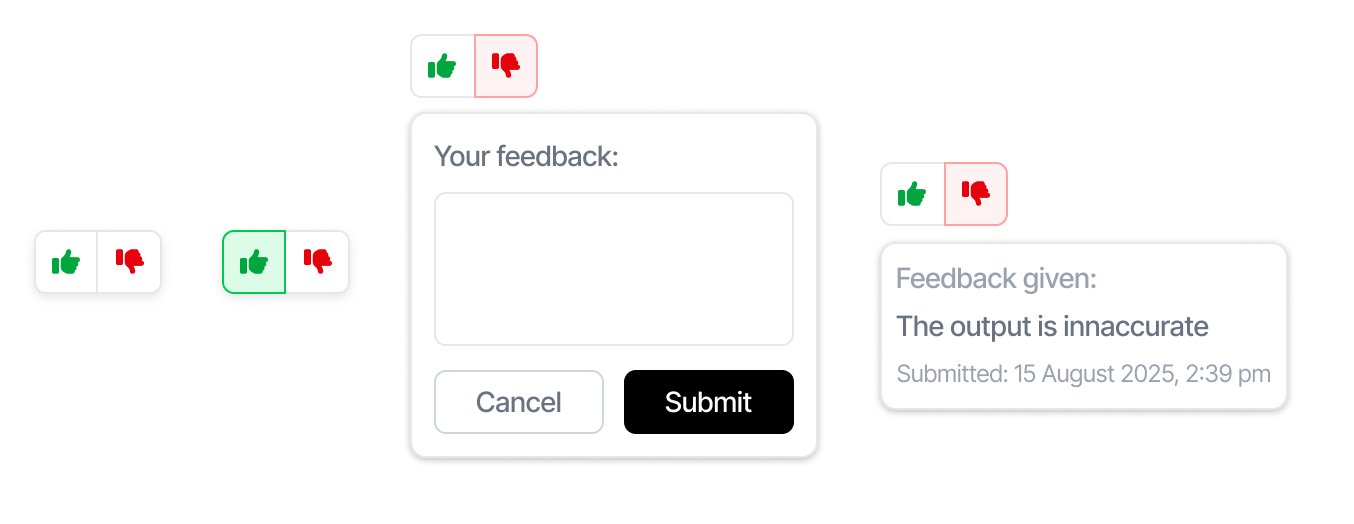

Recently, we added a dead-simple thumbs-up/thumbs-down component to our AI agent app. Nothing fancy. No LLM-driven summaries of feedback. Just two buttons, old-school, like a YouTube video circa 2010. Click up if you like what the agent did, down if you don’t. That’s it.

You see, most of the evaluation we’d been doing up to that point relied on historical data and backtesting. Which, in theory, is great. You get a lot of signal. You can benchmark your agents. You feel scientific. But in practice? It’s a little like grading a performance based on a dress rehearsal. The data’s often messy, the context is incomplete, and the errors can be subtle but compounding.

Plus, no matter how precise your metrics are, they’re still talking to you, the builder. Not the person trying to get the AI to solve something they care about.

When we rolled out the feedback buttons, something shifted. Suddenly, we weren’t just evaluating output at a macro level; we were getting row-level truth. Step by step, we saw what users liked and didn’t like, where the agent took a weird turn, and where it nailed the landing. There’s something incredibly grounding about seeing a downvote on a single action in the agent’s graph, knowing that this step, not the whole task, was the letdown. It gives the user agency. It gives the product direction.
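The post doesn’t say how those votes are stored, but the step-level idea is easy to picture in code. Below is a minimal Python sketch, assuming a hypothetical `StepFeedback` record that ties each click to one run and one step; the field names, `tally_by_step`, `worst_steps`, and the ranking rule are all illustrative assumptions, not how the app described here actually works.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Literal

Vote = Literal["up", "down"]


@dataclass(frozen=True)
class StepFeedback:
    """One thumbs click on one step of one agent run (hypothetical schema)."""
    run_id: str
    step_id: str
    vote: Vote


def tally_by_step(events: list[StepFeedback]) -> dict[str, dict[str, int]]:
    """Count up and down votes per step, summed across all runs."""
    counts: dict[str, dict[str, int]] = defaultdict(lambda: {"up": 0, "down": 0})
    for event in events:
        counts[event.step_id][event.vote] += 1
    return dict(counts)


def worst_steps(
    events: list[StepFeedback], min_votes: int = 1
) -> list[tuple[str, float, int]]:
    """Rank steps by share of downvotes, highest first.

    Returns (step_id, downvote_share, total_votes) tuples. Steps with fewer
    than `min_votes` votes are skipped so one stray click can't dominate.
    Ties are broken by more total votes, then by step_id for stable output.
    """
    ranked: list[tuple[str, float, int]] = []
    for step_id, counts in tally_by_step(events).items():
        total = counts["up"] + counts["down"]
        if total >= min_votes:
            ranked.append((step_id, counts["down"] / total, total))
    ranked.sort(key=lambda row: (-row[1], -row[2], row[0]))
    return ranked
```

Ranking by downvote share rather than raw downvote count keeps a frequently executed step from looking bad just because it runs often; `min_votes` is the knob for how much evidence a step needs before it shows up at all.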

It’s like getting Yelp reviews on your agent’s reasoning, one bite at a time.

And here’s the kicker: users actually used it. No long forms. No surveys. Just thumbs. And within a day, we had more honest signals than weeks of speculative testing could have given us.

I think there’s a lesson here, not just for AI builders but for anyone working on tools powered by complex systems. Don’t just optimize for elegance. Optimize for honest reactions. Put a button in. Let the user speak. Then listen.

So, if you’re building an AI app and wondering how to make it better, ask yourself:

Are you hearing from your users, or just guessing what they’d say?

Because in a world of agents, sometimes the most powerful intelligence still comes from a human with an opinion.